How can behavioral science be used to improve the assessment of risk and safety?

LESSONS FROM OTHER FIELDS

By Elspeth Kirkman, Senior Director of Health, Education & Communities for the Behavioral Insights Team

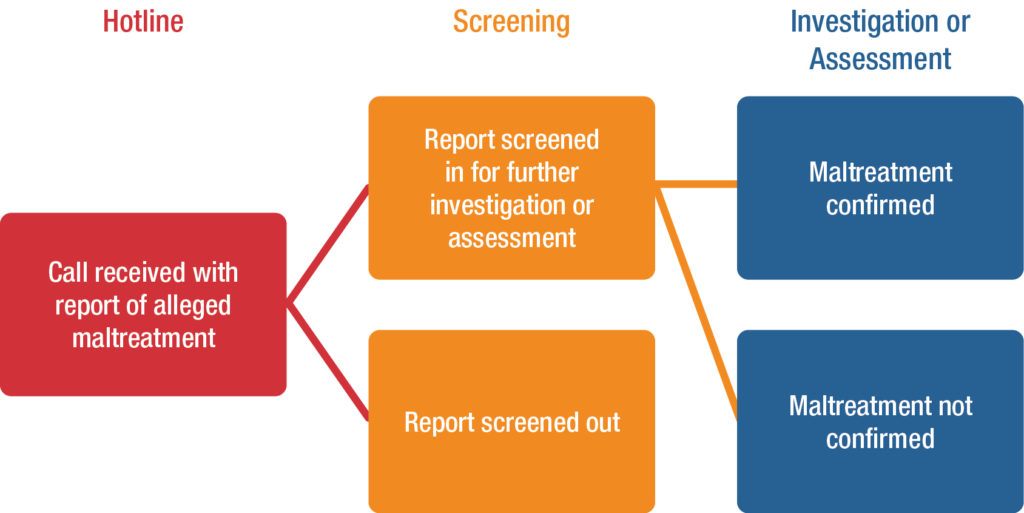

In 2017, child welfare agencies across the country received 4.1 million referrals of alleged child maltreatment, 58 percent of which were subsequently screened in for an investigation or assessment, representing an increase of almost 15 percent compared to 2012.1 The sheer volume of referrals and their steady increase over time put enormous pressure on the system, threatening its ability to deliver positive outcomes. Even with child maltreatment prevention efforts underway to reduce entries into the system, sharpening staff ability to consistently and accurately assess safety and risk at all stages is critical to ensuring that issues are correctly identified, scarce resources are allocated to the children and families with the highest needs, and those not needing intervention experience minimal disruption to their lives.

Assessment processes involve myriad decision points, from whether to accept a referral for investigation to the order in which home visits are scheduled. Although approaches differ across the country, these decision points and their associated challenges are common. This brief explores the benefits behaviorally designed processes can offer at three key decision points in assessment: 1) the hotline, where reports of maltreatment are initially received; 2) the screening decision, where a determination is made about whether further investigation or assessment is necessary; and 3) the investigation or assessment process, which seeks to determine whether the report of maltreatment is substantiated.

Front End Decision Points

Safety first

Practitioners face many barriers to making the right decision for each child every day, from processes that promote risk aversion, to administrative costs associated with decisions that might allow a child to stay at home, to overly specified legal definitions of maltreatment. When redesigning any decision point in the system, it is critical to understand how the changes will affect the quality of assessment. There are, of course, already excellent approaches that address these challenges. For example, child welfare agencies are finding that Safety Organized Practice can drive dramatic improvements, providing staff at all levels with the tools, structure, and team support they need to stay focused on what is best for the child. Such an approach complements behavioral design principles well and could perhaps be strengthened further by the concepts described throughout this brief.

Safety Organized Practice (SOP) emphasizes the importance of teamwork and child-centricity in child welfare. A core belief of SOP is that all families have strengths. SOP aims to build relationships between the child welfare agency and the family, strengthening this partnership by involving informal support networks of friends and family members. The goal is to work together to find solutions that ensure safety, permanency, and well-being for children. SOP is informed by an integration of evidence-based practices and approaches.

Strategies to address hotline calls

Reducing inappropriate calls and contacts

Hotlines receive many calls that concern matters beyond the purview of child welfare. Finding ways to discourage unnecessary contacts while ensuring that proper calls are not excluded is a constant challenge. In many states, this issue is exacerbated by the fact that all contacts must be logged and local policy includes a supervisory review of all cases that are not put forward for investigation. This means that if the fraction of inappropriate calls is high, a supervisor could spend more time reviewing unnecessary contacts than truly concerning cases.

Research in similar settings shows that even a slight pause in response time to phone calls can lead to a dramatic reduction in the proportion of inappropriate calls. For example, an analysis of calls to a local non-emergency reporting line in South Wales2 shows that inappropriate calls are roughly halved by a three-second pause – or roughly a single ring on the line. The proportion of inappropriate calls was reduced even more dramatically when the caller waited for at least six seconds for the call to be answered; around 40 percent of calls answered within one second were inappropriate, compared to just 10 percent of those that waited at least six seconds. It would appear that just hearing the phone ring is enough to prompt many inappropriate callers to drop off the line, whereas the more serious callers understandably are not so easily dissuaded.
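An agency wanting to check whether the same pattern holds on its own hotline could run a comparable breakdown on its call log. The following is a minimal sketch only: the log format (pairs of wait time in seconds and a flag for whether the call was later judged inappropriate), the function names, and the bucket boundaries are all assumptions for illustration, not the method used in the South Wales analysis.

```python
from collections import defaultdict


def bucket_label(wait, edges):
    """Name the wait-time bucket that a call falls into."""
    lower = 0
    for edge in edges:
        if wait < edge:
            return f"{lower}-{edge}s"
        lower = edge
    return f"{lower}s+"


def inappropriate_share_by_wait(calls, edges=(1, 3, 6)):
    """Share of inappropriate calls within each wait-time bucket.

    calls: iterable of (wait_seconds, is_inappropriate) pairs
           (a hypothetical log format).
    edges: ascending bucket boundaries in seconds (assumed values).
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for wait, is_inappropriate in calls:
        label = bucket_label(wait, edges)
        totals[label] += 1
        flagged[label] += int(is_inappropriate)
    return {label: flagged[label] / totals[label] for label in totals}


# Made-up records, for illustration only.
sample_calls = [(0.5, True), (0.5, False), (4, False),
                (7, False), (7, True), (8, False), (9, False)]
shares = inappropriate_share_by_wait(sample_calls)
```

A breakdown like this only describes who stays on the line; it does not show whether appropriate callers are also being lost, which would need to be checked separately.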

Improving the quality of reports and the efficiency of hotline calls

Even callers whose reports eventually move on to investigation may not have the right information on hand before they start their hotline call. This puts cases at risk of being wrongly rejected and takes up time that could be spent on other activities or calls. Simple cues while the caller is on hold may be enough to ensure that cases can be assessed faster and more thoroughly when the conversation starts. Research on physical queues shows that environmental signals, such as queue guides or carpet runners, can prompt those in line to begin preparing for the task at the end of the line.3 The theory is that people zone out and fail to notice the passage of time while in line; small changes, whether physical or sensory, can jog people to attention and prompt them to think about what they need to do next. Using audio signals, such as a change in announcer voice or music, to prompt callers to get the necessary information ready may be more effective than simply telling those on hold to prepare.

Strategies that impact initial screening decisions

Reducing over-referral to investigations

One of the major differences among child welfare hotlines around the country is staffing. Some hotlines, like Arkansas's, employ newly graduated social workers, using the position as an introduction to case work practice. Others, like San Diego County's, employ social workers with considerable experience in the field, viewing this experience as critical to proper assessment of a child maltreatment report. All kinds of factors, from workforce composition to the level of specificity in the state’s definition of maltreatment, shape these staffing decisions. However, regardless of the staffing model, those who work on the hotline will generally over-refer or under-refer at least some fraction of their cases. Over-referral burdens staff tasked with investigating in the field, while under-referral may leave children at risk. Research from other fields shows that individualized feedback on the outcome of decisions can be a critical factor in helping staff to improve their assessment skills. For example, giving doctors in the habit of over-prescribing antibiotics a single instance of feedback that they were making different decisions from their peers was highly effective in correcting this behavior.4 Such mechanisms can be easy to implement in hotline environments that use newer technologies, where referrals are often tracked through investigation and beyond. Hotline staff members can then be sent automated reports on their effectiveness, how they compare to their peers, and the downstream outcomes for children and families.
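To make the idea of an automated peer-comparison report concrete, the sketch below computes each hotline worker's referral rate and how far it sits from the peer median. It is an illustration under stated assumptions: the input format, the function name `referral_feedback`, and the choice of the median as the peer benchmark are hypothetical, and a real report would also need the downstream outcomes the text describes.

```python
from statistics import median


def referral_feedback(decisions):
    """Compare each worker's referral rate with the peer median.

    decisions: dict mapping a worker id to a list of booleans,
               where True means the call was referred for
               investigation (a hypothetical input format).
    """
    # Workers with no recorded decisions are skipped.
    rates = {worker: sum(calls) / len(calls)
             for worker, calls in decisions.items() if calls}
    # The median here includes the worker's own rate (a simplification).
    peer_median = median(rates.values())
    return {
        worker: {
            "referral_rate": rate,
            "peer_median": peer_median,
            "difference": rate - peer_median,
        }
        for worker, rate in rates.items()
    }


# Made-up decisions for three workers, for illustration only.
report = referral_feedback({
    "worker_a": [True, True, True, False],
    "worker_b": [True, False, False, False],
    "worker_c": [True, True, False, False],
})
```

A large positive difference would flag possible over-referral and a large negative one possible under-referral, though differences in case mix between shifts could also explain a gap.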

Reducing bias in case reviews

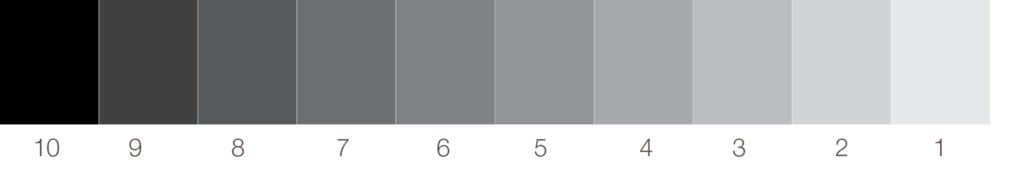

Research on decision science shows that processes that demand sequential decisions on independent cases can result in biased assessments. For example, in recruitment, evidence shows that an assessment of a candidate can be influenced by the quality of the candidate whose resume was reviewed just prior. Seeing an excellent candidate right before penalizes the next applicant, while seeing a bad candidate right before gives that same applicant’s chances a boost.5 This phenomenon is demonstrated below through two sets of grayscale charts.6

First, take 10 seconds to examine the horizontal chart below:

Now, without referencing the horizontal chart, look at the vertical gray squares below and try to determine the corresponding shade number from the horizontal chart:

Can you confidently say which number each shade corresponds to? In fact, when each shade is shown one by one, a person’s frame of reference adjusts to the last square the person saw: the judgment is literally colored by the example that came right before it. While case assessment is certainly more complex than ranking shades of gray, the point remains: the order of the cases, the state of attention spans, and the weight placed on different factors can all impact a person’s judgment, meaning that a person might not draw the right conclusions. This is relevant for staff in both hotline and investigation teams dealing with multiple cases day in and day out. Technological assistants, such as predictive tools that ignore the order in which cases arrive and include the factors from the case history that really matter, can be a great help in offsetting the bias that results from ordering effects.7 While these technologies are not yet available everywhere and may not be for some time, the good news is that relatively simple changes to process design can help, especially for reviews or quality checks where there may be less time pressure and more control over the order in which information is seen.

One approach is comparative judgment.8 To understand this, now consider a different way of assessing color comparisons. Which of the colors in the pair below is darker?

How about this pair?

It is much easier to make a judgment when there is a single point of comparison. A large number of rapid comparative judgments like this can quickly establish the rank order of colors and reproduce the scale. In one child welfare agency, the night supervisor for the hotline had a secondary task: reviewing every case that came into the hotline over the course of the day to verify recommendations. In such instances, a comparative judgment approach that presents cases side by side may be more effective at establishing the right priority order than sequential review. Of course, there may be logistical challenges in implementing such an approach and it may not be a fit in all settings. At a minimum, ensuring that supervisors or others conducting secondary reviews see cases in a different order than the initial worker did may help break biases in assessment resulting from ordering effects. For example, if a caseworker deemed a borderline case low risk because the previous case was especially high risk, a supervisor is less likely to repeat the mistake if that same set of cases is reviewed in a different order.
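The two ideas in this section, building a priority order from pairwise judgments and varying the order of a secondary review, can each be sketched in a few lines. The version below is a simplified illustration: comparative judgment tools often fit a statistical model such as Bradley-Terry to the pairwise results, whereas this sketch swaps in a plain win share, and all function names and case identifiers are hypothetical.

```python
import random
from collections import Counter


def rank_by_comparisons(judgments):
    """Order items from highest to lowest priority by win share.

    judgments: list of (higher_priority, lower_priority) pairs,
               one per side-by-side comparison.
    """
    wins = Counter()
    appearances = Counter()
    for higher, lower in judgments:
        wins[higher] += 1
        appearances[higher] += 1
        appearances[lower] += 1
    return sorted(appearances,
                  key=lambda item: wins[item] / appearances[item],
                  reverse=True)


def shuffled_review_order(case_ids, seed=None):
    """Return the same cases in a random order for a secondary review.

    A random order can occasionally match the original by chance.
    """
    return random.Random(seed).sample(list(case_ids), k=len(case_ids))


# Made-up comparisons between three hypothetical cases.
priority = rank_by_comparisons([
    ("case_A", "case_B"),
    ("case_A", "case_C"),
    ("case_B", "case_C"),
])
second_pass = shuffled_review_order(["case_A", "case_B", "case_C"], seed=1)
```

Win share is only meaningful when every case appears in several comparisons; with sparse comparisons, a model-based approach would be the safer choice.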

Strategies that impact investigations and assessments

Improving process adherence and quality information gathering

Social workers who assess household safety during investigation are often required to balance complex tasks involving expert judgment with a number of simple activities, such as checking for running water and working electricity in the home. Beyond this, they also have to pay attention in the moment while documenting what they observe during their visit. Although note taking and checking a faucet are arguably simpler than assessing whether a home environment is safe for a young child, these basic tasks can be easy to forget without effective and timely prompts. This issue is not unique to child maltreatment investigations. Research shows that the way in which staff — from pilots to surgeons — are guided through routine tasks can make the difference in the overall quality of their work. Hospitals, for example, have successfully reduced deaths and complications resulting from surgery by implementing checklists that remind staff to perform simple actions like hand-washing or checking that wounds are free of surgical accessories before suturing.9 Similarly, changing the form physicians use to write prescriptions was enough to reduce the number of errors and increase the amount of key information, such as the doctor’s phone number.10

Too often, social workers are given either printed or electronic case notes but nothing in the way of a structured visitation guide or space in the printed or electronic form to record notes. Simply providing a checklist, prompting questions, and room for documenting the visit could go a long way to improving the quality of information captured, reducing the need for follow-up visits, and minimizing work when translating notes into the system.
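As one way to picture such a structured visitation guide in electronic form, the sketch below pairs each prompt with a completion flag and space for notes, and lists anything still outstanding before the worker leaves the home. The class names, fields, and example prompts are assumptions for illustration rather than any agency's actual form.

```python
from dataclasses import dataclass, field


@dataclass
class ChecklistItem:
    prompt: str          # what the worker should check or ask
    done: bool = False   # ticked off during the visit
    notes: str = ""      # room for documenting observations


@dataclass
class VisitRecord:
    case_id: str
    items: list = field(default_factory=list)

    def outstanding(self):
        """Prompts not yet completed, to review before ending the visit."""
        return [item.prompt for item in self.items if not item.done]


# Hypothetical visit using two of the simple checks named in the text.
visit = VisitRecord(
    case_id="example-001",
    items=[
        ChecklistItem("Check for running water"),
        ChecklistItem("Check for working electricity"),
    ],
)
visit.items[0].done = True
visit.items[0].notes = "Kitchen and bathroom faucets working."
remaining = visit.outstanding()
```

Keeping the notes attached to each prompt means the record can be transferred into the case system item by item rather than retyped from free-form notes.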

Conclusion

Prioritizing safety, keeping children from disruption whenever possible, and managing the enormous volume of referrals are incredibly difficult. However, applying small tweaks at each stage of the process can make a big difference in improving the efficiency and effectiveness of work done from intake to assessment.

1 U.S. Department of Health & Human Services, Administration for Children and Families, Administration on Children, Youth and Families, Children’s Bureau. (2018). Child Maltreatment 2017. Available from https://www.acf.hhs.gov/sites/default/files/documents/cb/cm2017.pdf

2 https://www.bi.team/publications/the-behavioural-insights-teams-update-report-2015-16/

3 Zhao, M., Lee, L., & Soman, D. (2012). Crossing the virtual boundary: The effect of task-irrelevant environmental cues on task implementation. Psychological Science, 23(10), 1200-1207.

4 Hallsworth, M., Chadborn, T., Sallis, A., Sanders, M., Berry, D., Greaves, F., & Davies, S. C. (2016). Provision of social norm feedback to high prescribers of antibiotics in general practice: a pragmatic national randomised controlled trial. The Lancet, 387(10029), 1743-1752.

5 Described at https://medium.com/finding-needles-in-haystacks/hiring-honeybees-and-human-decision-making-33f3a9d76763

6 Inspired by https://dev.nomoremarking.com/demo?countryCode=US

7 Initially documented: Rowe, P. M. (1967). Order effects in assessment decisions. Journal of Applied Psychology, 51(2), 170.

8 Jones, I., Swan, M., & Pollitt, A. (2015). Assessing mathematical problem solving using comparative judgement. International Journal of Science and Mathematics Education, 13(1), 151-177.

9 Haynes, A. B., Weiser, T. G., Berry, W. R., Lipsitz, S. R., Breizat, A. H. S., Dellinger, E. P., ... & Merry, A. F. (2009). A surgical safety checklist to reduce morbidity and mortality in a global population. New England Journal of Medicine, 360(5), 491-499.

10 King, D., Jabbar, A., Charani, E., Bicknell, C., Wu, Z., Miller, G., & Darzi, A. (2014). Redesigning the ‘choice architecture’ of hospital prescription charts: a mixed methods study incorporating in situ simulation testing. BMJ Open, 4(12).

This article is the second in a four-part series on decision-making and behavioral science in child welfare. The series looks at lessons from other fields and considers their relevance at critical steps in the child welfare system.